Dot product

In mathematics, the dot product or scalar product[note 1] is an algebraic operation that takes two equal-length sequences of numbers (usually coordinate vectors) and returns a single number. In Euclidean geometry, the dot product of the Cartesian coordinates of two vectors is widely used and often called "the" inner product (or rarely projection product) of Euclidean space even though it is not the only inner product that can be defined on Euclidean space; see also inner product space.

Algebraically, the dot product is the sum of the products of the corresponding entries of the two sequences of numbers. Geometrically, it is the product of the Euclidean magnitudes of the two vectors and the cosine of the angle between them. These definitions are equivalent when using Cartesian coordinates. In modern geometry, Euclidean spaces are often defined by using vector spaces. In this case, the dot product is used for defining lengths (the length of a vector is the square root of the dot product of the vector by itself) and angles (the cosine of the angle of two vectors is the quotient of their dot product by the product of their lengths).

The name "dot product" is derived from the centered dot " · " that is often used to designate this operation; the alternative name "scalar product" emphasizes that the result is a scalar, rather than a vector, as is the case for the vector product in three-dimensional space.

Contents

1 Definition

1.1 Algebraic definition

1.2 Geometric definition

1.3 Scalar projection and first properties

1.4 Equivalence of the definitions

2 Properties

2.1 Application to the law of cosines

3 Triple product

4 Physics

5 Generalizations

5.1 Complex vectors

5.2 Inner product

5.3 Functions

5.4 Weight function

5.5 Dyadics and matrices

5.6 Tensors

6 Computation

6.1 Algorithms

6.2 Libraries

7 See also

8 Notes

9 References

10 External links

Definition

The dot product may be defined algebraically or geometrically. The geometric definition is based on the notions of angle and distance (magnitude of vectors). The equivalence of these two definitions relies on having a Cartesian coordinate system for Euclidean space.

In modern presentations of Euclidean geometry, the points of space are defined in terms of their Cartesian coordinates, and Euclidean space itself is commonly identified with the real coordinate space $\mathbb{R}^n$. In such a presentation, the notions of length and angle are defined by means of the dot product. The length of a vector is defined as the square root of the dot product of the vector with itself, and the cosine of the (non-oriented) angle between two vectors of length one is defined as their dot product. So the equivalence of the two definitions of the dot product is a part of the equivalence of the classical and the modern formulations of Euclidean geometry.

Algebraic definition

The dot product of two vectors $\mathbf{a} = [a_1, a_2, \dots, a_n]$ and $\mathbf{b} = [b_1, b_2, \dots, b_n]$ is defined as:[1]

- $\mathbf{a} \cdot \mathbf{b} = \sum_{i=1}^{n} a_i b_i = a_1 b_1 + a_2 b_2 + \cdots + a_n b_n$

where Σ denotes summation and n is the dimension of the vector space. For instance, in three-dimensional space, the dot product of vectors [1, 3, −5] and [4, −2, −1] is:

- $[1, 3, -5] \cdot [4, -2, -1] = (1 \times 4) + (3 \times -2) + (-5 \times -1) = 4 - 6 + 5 = 3$

If vectors are identified with row matrices, the dot product can also be written as a matrix product

- $\mathbf{a} \cdot \mathbf{b} = \mathbf{a} \mathbf{b}^{\top},$

where $\mathbf{b}^{\top}$ denotes the transpose of $\mathbf{b}$.

Expressing the above example in this way, a 1 × 3 matrix (row vector) is multiplied by a 3 × 1 matrix (column vector) to get a 1 × 1 matrix that is identified with its unique entry:

- $\begin{bmatrix} 1 & 3 & -5 \end{bmatrix} \begin{bmatrix} 4 \\ -2 \\ -1 \end{bmatrix} = 3.$
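The algebraic definition translates almost directly into code. The following is a minimal sketch in plain Python; the function name `dot` is chosen here for illustration and does not come from any particular library.

```python
def dot(a, b):
    """Sum of the products of corresponding entries of a and b."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


# The worked example from the text: (1*4) + (3*-2) + (-5*-1)
print(dot([1, 3, -5], [4, -2, -1]))  # 3
```

The length check mirrors the requirement that the two sequences have equal length.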

Geometric definition

Illustration showing how to find the angle between vectors using the dot product

In Euclidean space, a Euclidean vector is a geometric object that possesses both a magnitude and a direction. A vector can be pictured as an arrow. Its magnitude is its length, and its direction is the direction in which the arrow points. The magnitude of a vector a is denoted by $\|\mathbf{a}\|$. The dot product of two Euclidean vectors a and b is defined by

- $\mathbf{a} \cdot \mathbf{b} = \|\mathbf{a}\| \, \|\mathbf{b}\| \cos\theta,$

where θ is the angle between a and b.

In particular, if a and b are orthogonal (the angle between them is 90°), then, since $\cos 90^{\circ} = 0$,

- $\mathbf{a} \cdot \mathbf{b} = 0.$

At the other extreme, if they are codirectional, then the angle between them is 0° and

- $\mathbf{a} \cdot \mathbf{b} = \|\mathbf{a}\| \, \|\mathbf{b}\|.$

This implies that the dot product of a vector a with itself is

- $\mathbf{a} \cdot \mathbf{a} = \|\mathbf{a}\|^2,$

which gives

- $\|\mathbf{a}\| = \sqrt{\mathbf{a} \cdot \mathbf{a}},$

the formula for the Euclidean length of the vector.
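Read in the other direction, the geometric definition gives a way to recover lengths and angles from coordinates. The sketch below assumes non-zero real vectors given as Python lists; the helper names `norm` and `angle` are illustrative, and the clamp guards against rounding pushing the cosine slightly outside [−1, 1].

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    """Euclidean length: square root of the dot product of a with itself."""
    return math.sqrt(dot(a, a))


def angle(a, b):
    """Angle in radians between two non-zero vectors."""
    cos_theta = dot(a, b) / (norm(a) * norm(b))
    # Clamp to [-1, 1] so rounding error cannot break acos.
    return math.acos(max(-1.0, min(1.0, cos_theta)))


print(norm([3, 4]))                           # 5.0
print(math.degrees(angle([1, 0], [0, 1])))    # orthogonal vectors
print(math.degrees(angle([1, 0], [2, 0])))    # codirectional vectors
```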

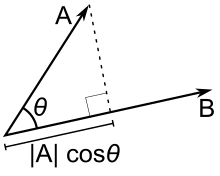

Scalar projection and first properties

Scalar projection

The scalar projection (or scalar component) of a Euclidean vector a in the direction of a Euclidean vector b is given by

- $a_b = \|\mathbf{a}\| \cos\theta,$

where θ is the angle between a and b.

In terms of the geometric definition of the dot product, this can be rewritten

- $a_b = \mathbf{a} \cdot \widehat{\mathbf{b}},$

where $\widehat{\mathbf{b}} = \mathbf{b} / \|\mathbf{b}\|$ is the unit vector in the direction of b.
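As a small illustration, the scalar projection can be computed by dividing the dot product by the length of b, which is the same as dotting a with the unit vector in the direction of b. This is a sketch under the assumption that b is non-zero; `scalar_projection` is a name introduced here.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def norm(a):
    return math.sqrt(dot(a, a))


def scalar_projection(a, b):
    """Component of a in the direction of b (b must be non-zero)."""
    return dot(a, b) / norm(b)


a, b = [3, 4], [2, 0]
print(scalar_projection(a, b))  # 3.0: the x-component of a
```

Multiplying the result by the length of b recovers the dot product itself, here 3.0 × 2.0 = 6.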

Distributive law for the dot product

The dot product is thus characterized geometrically by[4]

- $\mathbf{a} \cdot \mathbf{b} = a_b \|\mathbf{b}\| = b_a \|\mathbf{a}\|.$

The dot product, defined in this manner, is homogeneous under scaling in each variable, meaning that for any scalar α,

- $(\alpha \mathbf{a}) \cdot \mathbf{b} = \alpha (\mathbf{a} \cdot \mathbf{b}) = \mathbf{a} \cdot (\alpha \mathbf{b}).$

It also satisfies a distributive law, meaning that

- $\mathbf{a} \cdot (\mathbf{b} + \mathbf{c}) = \mathbf{a} \cdot \mathbf{b} + \mathbf{a} \cdot \mathbf{c}.$

These properties may be summarized by saying that the dot product is a bilinear form. Moreover, this bilinear form is positive definite, which means that $\mathbf{a} \cdot \mathbf{a}$ is never negative and is zero if and only if $\mathbf{a} = \mathbf{0}$, the zero vector.

Equivalence of the definitions

If $\mathbf{e}_1, \dots, \mathbf{e}_n$ are the standard basis vectors in $\mathbb{R}^n$, then we may write

- $\mathbf{a} = [a_1, \dots, a_n] = \sum_i a_i \mathbf{e}_i$
- $\mathbf{b} = [b_1, \dots, b_n] = \sum_i b_i \mathbf{e}_i.$

The vectors $\mathbf{e}_i$ are an orthonormal basis, which means that they have unit length and are at right angles to each other. Since these vectors have unit length,

- $\mathbf{e}_i \cdot \mathbf{e}_i = 1$

and since they form right angles with each other, if i ≠ j,

- $\mathbf{e}_i \cdot \mathbf{e}_j = 0.$

Thus in general we can say that

- $\mathbf{e}_i \cdot \mathbf{e}_j = \delta_{ij},$

where $\delta_{ij}$ is the Kronecker delta.

Also, by the geometric definition, for any vector $\mathbf{e}_i$ and a vector a, we note

- $\mathbf{a} \cdot \mathbf{e}_i = \|\mathbf{a}\| \, \|\mathbf{e}_i\| \cos\theta = \|\mathbf{a}\| \cos\theta = a_i,$

where $a_i$ is the component of vector a in the direction of $\mathbf{e}_i$.

Now applying the distributivity of the geometric version of the dot product gives

- $\mathbf{a} \cdot \mathbf{b} = \mathbf{a} \cdot \sum_i b_i \mathbf{e}_i = \sum_i b_i (\mathbf{a} \cdot \mathbf{e}_i) = \sum_i b_i a_i = \sum_i a_i b_i,$

which is precisely the algebraic definition of the dot product. So the geometric dot product equals the algebraic dot product.
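The equivalence can also be checked numerically. The sketch below works in two dimensions and, as an assumption made for the illustration, obtains the angle between the vectors from their polar angles (via `math.atan2`) rather than from the dot product, so that the two computations are independent. The function names are hypothetical.

```python
import math


def algebraic_dot(a, b):
    """Sum of products of corresponding coordinates."""
    return a[0] * b[0] + a[1] * b[1]


def geometric_dot(a, b):
    """Product of the lengths and the cosine of the enclosed angle."""
    theta = math.atan2(b[1], b[0]) - math.atan2(a[1], a[0])
    return math.hypot(a[0], a[1]) * math.hypot(b[0], b[1]) * math.cos(theta)


a, b = (3.0, 4.0), (-2.0, 5.0)
print(algebraic_dot(a, b))   # 14.0
print(geometric_dot(a, b))   # agrees up to floating-point rounding
```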

Properties

The dot product fulfills the following properties if a, b, and c are real vectors and r is a scalar.[1][2]

Commutative:

- $\mathbf{a} \cdot \mathbf{b} = \mathbf{b} \cdot \mathbf{a},$

- which follows from the definition (θ is the angle between a and b):

- $\mathbf{a} \cdot \mathbf{b} = \|\mathbf{a}\| \|\mathbf{b}\| \cos\theta = \|\mathbf{b}\| \|\mathbf{a}\| \cos\theta = \mathbf{b} \cdot \mathbf{a}.$

Distributive over vector addition:

- $\mathbf{a} \cdot (\mathbf{b} + \mathbf{c}) = \mathbf{a} \cdot \mathbf{b} + \mathbf{a} \cdot \mathbf{c}.$

Bilinear:

- $\mathbf{a} \cdot (r\mathbf{b} + \mathbf{c}) = r(\mathbf{a} \cdot \mathbf{b}) + (\mathbf{a} \cdot \mathbf{c}).$

Scalar multiplication:

- $(c_1 \mathbf{a}) \cdot (c_2 \mathbf{b}) = c_1 c_2 (\mathbf{a} \cdot \mathbf{b}).$

Not associative: the dot product between a scalar (a ⋅ b) and a vector (c) is not defined, so the expressions involved in the associative property, (a ⋅ b) ⋅ c and a ⋅ (b ⋅ c), are both ill-defined.[5] Note, however, that the previously mentioned scalar multiplication property is sometimes called the "associative law for scalar and dot product"[6], or one can say that "the dot product is associative with respect to scalar multiplication", because c (a ⋅ b) = (c a) ⋅ b = a ⋅ (c b).[7]

Orthogonal:

- Two non-zero vectors a and b are orthogonal if and only if a ⋅ b = 0.

No cancellation:

- Unlike multiplication of ordinary numbers, where if ab = ac, then b always equals c unless a is zero, the dot product does not obey the cancellation law:

- If a ⋅ b = a ⋅ c and a ≠ 0, then we can write: a ⋅ (b − c) = 0 by the distributive law; the result above says this just means that a is perpendicular to (b − c), which still allows (b − c) ≠ 0, and therefore b ≠ c.

Product rule: If a and b are differentiable vector-valued functions, then the derivative (denoted by a prime ′) of a ⋅ b is (a ⋅ b)′ = a′ ⋅ b + a ⋅ b′.
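Several of the properties listed above can be spot-checked on concrete vectors. The following sketch uses small integer vectors, chosen here only for illustration, so that all comparisons are exact; the helpers `add` and `scale` are hypothetical names. The last check shows the failure of cancellation: a ⋅ b = a ⋅ c although b ≠ c, because a is perpendicular to b − c.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def add(a, b):
    return [x + y for x, y in zip(a, b)]


def scale(r, a):
    return [r * x for x in a]


a, b, c, r = [1, 0], [2, 1], [2, 5], 3

# Commutative: a.b == b.a
print(dot(a, b) == dot(b, a))                                   # True

# Bilinear: a.(r b + c) == r (a.b) + (a.c)
print(dot(a, add(scale(r, b), c)) == r * dot(a, b) + dot(a, c)) # True

# No cancellation: a.b == a.c with a != 0, yet b != c
print(dot(a, b) == dot(a, c), b != c)                           # True True

# ... because a is orthogonal to (b - c)
print(dot(a, add(b, scale(-1, c))))                             # 0
```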

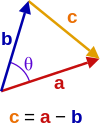

Application to the law of cosines

Triangle with vector edges a and b, separated by angle θ.

Given two vectors a and b separated by angle θ (see image right), they form a triangle with a third side c = a − b. The dot product of this with itself is:

$$\begin{aligned}
\mathbf{c} \cdot \mathbf{c} &= (\mathbf{a} - \mathbf{b}) \cdot (\mathbf{a} - \mathbf{b}) \\
&= \mathbf{a} \cdot \mathbf{a} - \mathbf{a} \cdot \mathbf{b} - \mathbf{b} \cdot \mathbf{a} + \mathbf{b} \cdot \mathbf{b} \\
&= a^2 - \mathbf{a} \cdot \mathbf{b} - \mathbf{a} \cdot \mathbf{b} + b^2 \\
&= a^2 - 2\, \mathbf{a} \cdot \mathbf{b} + b^2 \\
c^2 &= a^2 + b^2 - 2ab\cos\theta
\end{aligned}$$

which is the law of cosines.
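A quick numerical check of this derivation, as a sketch: the vectors below are chosen for illustration so that the angle between them is known in advance to be 45°, which lets the right-hand side be evaluated without using the dot product.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


a = [2.0, 0.0]
b = [1.0, 1.0]            # makes a 45 degree angle with a
theta = math.pi / 4

c = [x - y for x, y in zip(a, b)]   # third side c = a - b

lhs = dot(c, c)                                       # c^2
len_a, len_b = math.sqrt(dot(a, a)), math.sqrt(dot(b, b))
rhs = len_a**2 + len_b**2 - 2 * len_a * len_b * math.cos(theta)

print(lhs, rhs)   # both are 2 up to floating-point rounding
```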

Triple product

There are two ternary operations involving dot product and cross product.

The scalar triple product of three vectors is defined as

- $\mathbf{a} \cdot (\mathbf{b} \times \mathbf{c}) = \mathbf{b} \cdot (\mathbf{c} \times \mathbf{a}) = \mathbf{c} \cdot (\mathbf{a} \times \mathbf{b}).$

Its value is the determinant of the matrix whose columns are the Cartesian coordinates of the three vectors. It is the signed volume of the parallelepiped defined by the three vectors.

The vector triple product is defined by[1][2]

- $\mathbf{a} \times (\mathbf{b} \times \mathbf{c}) = \mathbf{b} (\mathbf{a} \cdot \mathbf{c}) - \mathbf{c} (\mathbf{a} \cdot \mathbf{b}).$

This identity, also known as Lagrange's formula, may be remembered as "BAC minus CAB", keeping in mind which vectors are dotted together. This formula finds application in simplifying vector calculations in physics.
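Both triple products can be verified on a concrete example. The sketch below uses integer vectors picked for illustration and hand-written helpers (`cross`, `det3`), so that every comparison is exact.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]


def det3(a, b, c):
    """Determinant of the 3x3 matrix whose columns are a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - b[0] * (a[1] * c[2] - a[2] * c[1])
            + c[0] * (a[1] * b[2] - a[2] * b[1]))


a, b, c = [1, 2, 3], [4, 5, 6], [7, 8, 10]

# Scalar triple product: invariant under cyclic permutation, equal to det.
print(dot(a, cross(b, c)), dot(b, cross(c, a)), dot(c, cross(a, b)))  # -3 -3 -3
print(det3(a, b, c))                                                   # -3

# Vector triple product: "BAC minus CAB".
lhs = cross(a, cross(b, c))
rhs = [dot(a, c) * bi - dot(a, b) * ci for bi, ci in zip(b, c)]
print(lhs, rhs)   # [-12, 9, -2] [-12, 9, -2]
```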

Physics

In physics, vector magnitude is a scalar in the physical sense, i.e. a physical quantity independent of the coordinate system, expressed as the product of a numerical value and a physical unit, not just a number. The dot product is also a scalar in this sense, given by the formula, independent of the coordinate system. Examples include:[8][9]

Mechanical work is the dot product of force and displacement vectors,

Magnetic flux is the dot product of the magnetic field and the vector area,

Power is the dot product of force and velocity.
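As a small worked illustration of the first example, the sketch below computes the work done by a constant force along a straight displacement. The numerical values (a force in newtons and a displacement in metres) are made up for the example.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


force = [3.0, 4.0]          # hypothetical constant force, in newtons
displacement = [10.0, 0.0]  # hypothetical displacement, in metres

work = dot(force, displacement)
print(work)  # 30.0 joules: only the force component along the motion contributes
```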

Generalizations

Complex vectors

For vectors with complex entries, using the given definition of the dot product would lead to quite different properties. For instance the dot product of a vector with itself would be an arbitrary complex number, and could be zero without the vector being the zero vector (such vectors are called isotropic); this in turn would have consequences for notions like length and angle. Properties such as the positive-definite norm can be salvaged at the cost of giving up the symmetric and bilinear properties of the scalar product, through the alternative definition[10][1]

- $\mathbf{a} \cdot \mathbf{b} = \sum_i a_i \overline{b_i},$

where $\overline{b_i}$ is the complex conjugate of $b_i$. Then the scalar product of any vector with itself is a non-negative real number, and it is nonzero except for the zero vector. However, this scalar product is sesquilinear rather than bilinear: with the definition above it is linear in a but conjugate linear in b. It is also not symmetric, since

- $\mathbf{a} \cdot \mathbf{b} = \overline{\mathbf{b} \cdot \mathbf{a}}.$

The angle between two complex vectors is then given by

- $\cos\theta = \frac{\operatorname{Re}(\mathbf{a} \cdot \mathbf{b})}{\|\mathbf{a}\| \, \|\mathbf{b}\|}.$

This type of scalar product is nevertheless useful, and leads to the notions of Hermitian form and of general inner product spaces.
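The difference between the two definitions is easy to see on the vector (1, i). In the sketch below, `bilinear_dot` is the unconjugated sum and `hermitian_dot` conjugates the entries of the second argument, matching the alternative definition above; both names are introduced here for illustration.

```python
def bilinear_dot(a, b):
    """Plain sum of products, with no conjugation."""
    return sum(x * y for x, y in zip(a, b))


def hermitian_dot(a, b):
    """Sum of a_i times the complex conjugate of b_i."""
    return sum(x * complex(y).conjugate() for x, y in zip(a, b))


v = [1, 1j]
print(bilinear_dot(v, v))    # 0j: a non-zero (isotropic) vector with zero "length"
print(hermitian_dot(v, v))   # (2+0j): real and positive

a, b = [1 + 2j, 3], [1j, 1 - 1j]
print(hermitian_dot(a, b))                 # (5+2j)
print(hermitian_dot(b, a).conjugate())     # (5+2j): conjugate symmetry
```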

Inner product

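The inner product generalizes the dot product to abstract vector spaces over a field of scalars, being either the field of real numbers $\mathbb{R}$ or the field of complex numbers $\mathbb{C}$. It is usually denoted using angular brackets by $\langle \mathbf{a}, \mathbf{b} \rangle$.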

The inner product of two vectors over the field of complex numbers is, in general, a complex number, and is sesquilinear instead of bilinear. An inner product space is a normed vector space, and the inner product of a vector with itself is real and positive-definite.

Functions

The dot product is defined for vectors that have a finite number of entries. Thus these vectors can be regarded as discrete functions: a length-n vector u is, then, a function with domain {k ∈ ℕ ∣ 1 ≤ k ≤ n}, and $u_i$ is a notation for the image of i under the function/vector u.

This notion can be generalized to continuous functions: just as the inner product on vectors uses a sum over corresponding components, the inner product on functions is defined as an integral over some interval a ≤ x ≤ b (also denoted [a, b]):[1]

- $\langle u, v \rangle = \int_a^b u(x) v(x) \, dx$

Generalizing further to complex functions ψ(x) and χ(x), by analogy with the complex inner product above, gives[1]

- $\langle \psi, \chi \rangle = \int_a^b \psi(x) \overline{\chi(x)} \, dx.$
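For real functions, the integral can be approximated numerically, which makes the analogy with the finite sum concrete. The sketch below uses a simple midpoint rule with a hypothetical helper `inner`; the choice of rule and the number of subintervals are assumptions made for the illustration, not part of the definition.

```python
import math


def inner(u, v, a, b, n=1000):
    """Midpoint-rule approximation of the integral of u(x) v(x) over [a, b]."""
    h = (b - a) / n
    return h * sum(u(a + (k + 0.5) * h) * v(a + (k + 0.5) * h) for k in range(n))


# sin and cos are orthogonal on [0, 2*pi]; sin has squared "length" pi there.
print(inner(math.sin, math.cos, 0.0, 2 * math.pi))  # approximately 0
print(inner(math.sin, math.sin, 0.0, 2 * math.pi))  # approximately pi
```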

Weight function

Inner products can have a weight function, i.e. a function which weights each term of the inner product with a value. Explicitly, the inner product of functions $u(x)$ and $v(x)$ with respect to the weight function $r(x) > 0$ is

- $\langle u, v \rangle = \int_a^b r(x) u(x) v(x) \, dx.$

Dyadics and matrices

Matrices have the Frobenius inner product, which is analogous to the vector inner product. It is defined as the sum of the products of the corresponding components of two matrices A and B having the same size:

- $\mathbf{A} : \mathbf{B} = \sum_i \sum_j A_{ij} \overline{B_{ij}} = \operatorname{tr}(\mathbf{B}^{\mathrm{H}} \mathbf{A}) = \operatorname{tr}(\mathbf{A} \mathbf{B}^{\mathrm{H}}).$

- $\mathbf{A} : \mathbf{B} = \sum_i \sum_j A_{ij} B_{ij} = \operatorname{tr}(\mathbf{B}^{\mathrm{T}} \mathbf{A}) = \operatorname{tr}(\mathbf{A} \mathbf{B}^{\mathrm{T}}) = \operatorname{tr}(\mathbf{A}^{\mathrm{T}} \mathbf{B}) = \operatorname{tr}(\mathbf{B} \mathbf{A}^{\mathrm{T}})$ (for real matrices)
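For real matrices, the agreement between the entrywise sum and the trace form can be checked directly. This is a sketch with matrices stored as lists of rows; the helpers `frobenius`, `transpose`, `matmul` and `trace` are written out here and are not taken from a library.

```python
def frobenius(A, B):
    """Sum of products of corresponding entries of two same-size real matrices."""
    return sum(x * y for row_a, row_b in zip(A, B) for x, y in zip(row_a, row_b))


def transpose(M):
    return [list(col) for col in zip(*M)]


def matmul(M, N):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*N)] for row in M]


def trace(M):
    return sum(M[i][i] for i in range(len(M)))


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]

print(frobenius(A, B))                 # 70
print(trace(matmul(transpose(B), A)))  # 70, i.e. tr(B^T A)
print(trace(matmul(A, transpose(B))))  # 70, i.e. tr(A B^T)
```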

Dyadics have a dot product and "double" dot product defined on them, see Dyadics (Product of dyadic and dyadic) for their definitions.

Tensors

The inner product between a tensor of order n and a tensor of order m is a tensor of order n + m − 2, see tensor contraction for details.

Computation

Algorithms

The straightforward algorithm for calculating a floating-point dot product of vectors can suffer from catastrophic cancellation. To avoid this, approaches such as the Kahan summation algorithm are used.
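The following sketch shows one way the Kahan idea can be applied to a dot product: a running compensation term carries the low-order bits that are lost when each product is added to the total. It compensates only the summation, not the rounding of the individual products, and the function names are illustrative. The naive version is written as an explicit loop because Python's built-in `sum` may itself use a compensated algorithm for floats in recent versions.

```python
def naive_dot(a, b):
    """Straightforward accumulation in floating point."""
    total = 0.0
    for x, y in zip(a, b):
        total += x * y
    return total


def kahan_dot(a, b):
    """Dot product with Kahan-compensated accumulation."""
    total = 0.0
    comp = 0.0                       # running estimate of the lost low-order part
    for x, y in zip(a, b):
        term = x * y - comp          # apply the correction from the previous step
        t = total + term             # low-order bits of term may be lost here
        comp = (t - total) - term    # recover what was lost (with opposite sign)
        total = t
    return total


# One large term followed by many terms too small to register individually.
a = [1.0] + [1e-16] * 10
b = [1.0] * 11

print(naive_dot(a, b))   # 1.0: every small term is rounded away
print(kahan_dot(a, b))   # close to the exact value 1 + 1e-15
```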

Libraries

A dot product function is included in BLAS level 1.

See also

- Cauchy–Schwarz inequality

- Cross product

- Matrix multiplication

- Metric tensor

Notes

^ The term scalar product is often also used more generally to mean a symmetric bilinear form, for example for a pseudo-Euclidean space.[citation needed]

References

1. S. Lipschutz; M. Lipson (2009). Linear Algebra (Schaum’s Outlines) (4th ed.). McGraw Hill. ISBN 978-0-07-154352-1.

2. M.R. Spiegel; S. Lipschutz; D. Spellman (2009). Vector Analysis (Schaum’s Outlines) (2nd ed.). McGraw Hill. ISBN 978-0-07-161545-7.

3. A I Borisenko; I E Tarapov (1968). Vector and tensor analysis with applications. Translated by Richard Silverman. Dover. p. 14.

4. Arfken, G. B.; Weber, H. J. (2000). Mathematical Methods for Physicists (5th ed.). Boston, MA: Academic Press. pp. 14–15. ISBN 978-0-12-059825-0.

5. Weisstein, Eric W. "Dot Product." From MathWorld--A Wolfram Web Resource. http://mathworld.wolfram.com/DotProduct.html

6. T. Banchoff; J. Wermer (1983). Linear Algebra Through Geometry. Springer Science & Business Media. p. 12. ISBN 978-1-4684-0161-5.

7. A. Bedford; Wallace L. Fowler (2008). Engineering Mechanics: Statics (5th ed.). Prentice Hall. p. 60. ISBN 978-0-13-612915-8.

8. K.F. Riley; M.P. Hobson; S.J. Bence (2010). Mathematical methods for physics and engineering (3rd ed.). Cambridge University Press. ISBN 978-0-521-86153-3.

9. M. Mansfield; C. O’Sullivan (2011). Understanding Physics (4th ed.). John Wiley & Sons. ISBN 978-0-47-0746370.

10. Berberian, Sterling K. (2014) [1992], Linear Algebra, Dover, p. 287, ISBN 978-0-486-78055-9

External links


Hazewinkel, Michiel, ed. (2001) [1994], "Inner product", Encyclopedia of Mathematics, Springer Science+Business Media B.V. / Kluwer Academic Publishers, ISBN 978-1-55608-010-4

- Weisstein, Eric W. "Dot product". MathWorld.

- Explanation of dot product including with complex vectors

"Dot Product" by Bruce Torrence, Wolfram Demonstrations Project, 2007.
